Nuclear fusion, the process that powers our sun and other stars, is considered by many the ‘holy grail’ of energy supply. Why is that so? The numbers tell the story.

The basic physics of fusion is well known and easily understood: when light elements (lighter than iron) are forced together under extreme conditions of pressure and temperature they will fuse, i.e., form a heavier element, with the products weighing slightly less than the two fusing nuclei combined. The mass that is apparently ‘lost’ is converted to energy according to Einstein’s famous equation E = mc² (energy equals mass times the speed of light squared).
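As a rough illustration of the ‘lost’ mass turning into energy, here is a minimal Python sketch for the deuterium-tritium reaction discussed further on. The atomic masses and the conversion factor are rounded reference values that I am supplying as assumptions (they are not from the original calculation), and the function name is purely illustrative.

```python
# Rounded atomic masses in unified atomic mass units (u).
# These are assumed reference values, not figures from the article.
MASS_U = {
    "deuterium": 2.014102,
    "tritium": 3.016049,
    "helium4": 4.002602,
    "neutron": 1.008665,
}

# Energy equivalent of 1 u of mass via E = mc^2, in MeV (rounded).
MEV_PER_U = 931.494


def dt_energy_release_mev():
    """Energy released by D + T -> He-4 + n, from the mass difference."""
    mass_in = MASS_U["deuterium"] + MASS_U["tritium"]
    mass_out = MASS_U["helium4"] + MASS_U["neutron"]
    # The products are lighter than the reactants; the difference is the energy.
    return (mass_in - mass_out) * MEV_PER_U


if __name__ == "__main__":
    print(f"Energy per D-T fusion: {dt_energy_release_mev():.1f} MeV")
```

With these inputs the mass difference is a little under 0.4% of the starting mass, and the result lands close to the 17.6 MeV figure quoted later in this post.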

It turns out that so much energy is released in this process (a simple, back-of-the-envelope calculation is shown below) that if the process can be harnessed on earth a virtually unlimited source of energy would be available. Fusion has other advantages as well, along with serious technological problems, both of which are also discussed below. First, why are the numbers so intriguing?

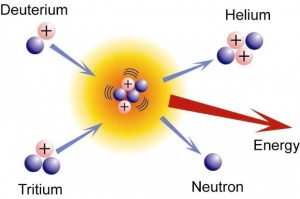

While many fusion reactions are possible and take place in stars, most attention has been directed to the deuterium-tritium (D-T) fusion reaction, which has the lowest energy threshold. Both deuterium and tritium are heavier isotopes of the common element hydrogen. Deuterium is readily available from seawater: water is two parts hydrogen to one part oxygen, and roughly one in every 6,400 of those hydrogen atoms is deuterium. Tritium does not occur in nature in usable quantities (it is radioactive and, with a half-life of about 12 years, disappears quickly), but it can be bred from a common element, lithium, by exposing the lithium to neutrons.

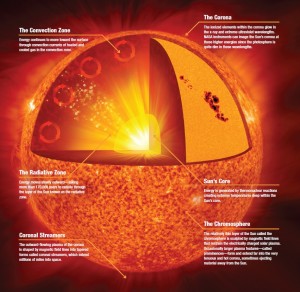

D-T is not the reaction that powers our sun, which runs mainly on the fusion of ordinary hydrogen nuclei (the proton-proton chain), but the principle is the same: the sun routinely converts massive amounts of hydrogen into massive amounts of helium, releasing massive amounts of energy.

It has been doing this for more than four billion years and is estimated to continue for about another five billion, until the hydrogen supply in its core finally dwindles. At that point the fusion reactions in the core will no longer be able to offset the gravitational forces acting on the sun’s very large mass. The sun is too small to explode as a supernova, as the star that formed the Crab Nebula did in 1054; instead its core will contract while its outer layers expand into a red giant, possibly swallowing up the earth and the other inner planets. Take heed!

To understand the numbers: every cubic meter of seawater, on average, contains about 30 grams of deuterium. There are roughly 300 million cubic miles of water on earth, 97% of it in the oceans.

Each deuterium nucleus (one proton + one neutron) weighs so little (about 3.3 thousandths of a trillionth of a trillionth of a kilogram, or 3.3 × 10⁻²⁷ kg) that these 30 grams amount to about nine trillion trillion nuclei. Each time one of these nuclei fuses with a tritium nucleus (one proton + two neutrons), 17.6 MeV (million electron volts) of energy is released, which can be captured as heat. Now an MeV sounds like a lot of energy but it isn’t: a Btu, a more common energy unit, is 6.6 thousand trillion MeV.
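For readers who want to check the arithmetic in this paragraph, here is a small Python sketch. The 30 grams per cubic meter comes from the text; the deuteron mass and the unit conversion factors are rounded values I am assuming, and the function names are just for illustration.

```python
# Inputs: the first is from the article, the rest are assumed rounded constants.
DEUTERIUM_KG_PER_M3 = 0.030    # 30 grams of deuterium per cubic meter of seawater
DEUTERON_MASS_KG = 3.34e-27    # mass of one deuterium nucleus
JOULES_PER_MEV = 1.602e-13     # one MeV expressed in joules
JOULES_PER_BTU = 1055.0        # one Btu expressed in joules


def deuterons_per_cubic_meter():
    """Number of deuterium nuclei in one cubic meter of seawater."""
    return DEUTERIUM_KG_PER_M3 / DEUTERON_MASS_KG


def mev_per_btu():
    """How many MeV make up a single Btu."""
    return JOULES_PER_BTU / JOULES_PER_MEV


if __name__ == "__main__":
    print(f"Deuterons per cubic meter: {deuterons_per_cubic_meter():.2e}")
    print(f"MeV per Btu: {mev_per_btu():.2e}")
```

The first number comes out near 9 × 10²⁴ nuclei and the second near 6.6 × 10¹⁵ MeV per Btu, which is why a single fusion event is tiny on an everyday scale even though the total is enormous.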

Now this is a lot of numbers, some very small and some very large, but taken together they mean that a cubic meter of seawater can lead to the production of about 7 million kWh of thermal energy, which if converted into electricity at 50% efficiency corresponds to 3.5 million kWh. If one were to convert the potential fusion energy in just over one million cubic meters of seawater (about 3 ten-thousandths of a cubic mile), one could supply the annual U.S. electricity production of 4 trillion kWh. And remember that our oceans contain several hundred million cubic miles of water. This is why some people get excited about fusion energy.
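The back-of-the-envelope calculation above can be reproduced in a few lines of Python. This is only a sketch: the 30 grams per cubic meter, 17.6 MeV per reaction, 50% conversion efficiency and 4 trillion kWh come from the text, while the deuteron mass and the unit conversions are rounded constants I am assuming. It also assumes, as the text does, that every deuterium nucleus finds a tritium partner.

```python
# Figures from the article.
DEUTERIUM_KG_PER_M3 = 0.030     # deuterium per cubic meter of seawater
MEV_PER_REACTION = 17.6         # energy released per D-T fusion
US_ANNUAL_KWH = 4e12            # annual U.S. electricity production

# Assumed rounded constants (not from the article).
DEUTERON_MASS_KG = 3.34e-27
JOULES_PER_MEV = 1.602e-13
JOULES_PER_KWH = 3.6e6
M3_PER_CUBIC_MILE = 4.168e9


def thermal_kwh_per_m3():
    """Thermal energy obtainable from the deuterium in 1 cubic meter of seawater."""
    nuclei = DEUTERIUM_KG_PER_M3 / DEUTERON_MASS_KG
    joules = nuclei * MEV_PER_REACTION * JOULES_PER_MEV
    return joules / JOULES_PER_KWH


def seawater_m3_for_demand(annual_kwh=US_ANNUAL_KWH, efficiency=0.5):
    """Cubic meters of seawater needed to meet a yearly electricity demand."""
    electric_kwh_per_m3 = thermal_kwh_per_m3() * efficiency
    return annual_kwh / electric_kwh_per_m3


if __name__ == "__main__":
    volume_m3 = seawater_m3_for_demand()
    print(f"Thermal energy per cubic meter: {thermal_kwh_per_m3():.2e} kWh")
    print(f"Seawater needed: {volume_m3:.2e} cubic meters")
    print(f"Same volume in cubic miles: {volume_m3 / M3_PER_CUBIC_MILE:.1e}")
```

Changing `efficiency` or `annual_kwh` shows how insensitive the conclusion is to the details: even at much lower efficiency the required volume remains a vanishingly small fraction of the oceans.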

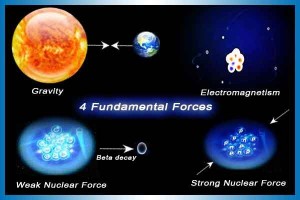

Unfortunately, there are a few barriers to overcome, starting with how to get D and T, both positively charged nuclei, to fuse. The positive electrical charges repel one another (the so-called Coulomb barrier), and you have to bring the nuclei incredibly close together (to within a few millionths of a billionth of a meter) before the ‘strong nuclear force’ can come into play and allow creation of the new, heavier helium nucleus (two protons + two neutrons), with one neutron left over. It is this still mysterious force that holds protons and neutrons together in our various elements.

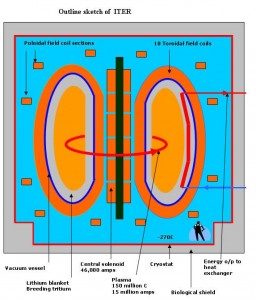

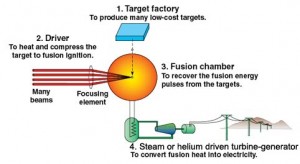

So how does one bring these two nuclei close enough together to allow fusion to occur? In the sun the answer is the enormous pressure and temperature created by gravity. The pressures in the sun are beyond our ability to achieve on earth in any sustained way, but the temperatures are not (temperature is a way of characterizing a particle’s kinetic energy, or speed), and so fusion research is focused on achieving extremely high temperatures (100 million degrees or higher) at achievable pressures. The fact that this is not easy is why fusion energy always seems to be a ways in the future. Two techniques are the focus of global fusion research: magnetic confinement (as in tokamaks such as ITER) and inertial confinement (as in laser-powered or ion-beam-powered fusion); see, e.g., http://www.world-nuclear.org/info/Current-and-Future-Generation/Nuclear-Fusion-Power. Several billion US$ a year are being spent on these activities, much of it in international collaborations.
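To make the link between temperature and particle energy a little more concrete, here is a minimal Python sketch that converts a temperature into a typical energy per particle. The Boltzmann constant is a rounded value I am assuming, the 100 million kelvin input is only an illustrative choice, and the ideal-gas average of (3/2) kT is a simplification of what goes on in a real fusion plasma.

```python
# Boltzmann constant in electron volts per kelvin (assumed rounded value).
BOLTZMANN_EV_PER_K = 8.617e-5


def thermal_energy_kev(temperature_k):
    """Characteristic thermal energy kT, in keV, at the given temperature."""
    return BOLTZMANN_EV_PER_K * temperature_k / 1000.0


def mean_kinetic_energy_kev(temperature_k):
    """Average kinetic energy (3/2) kT of a particle in an ideal gas, in keV."""
    return 1.5 * thermal_energy_kev(temperature_k)


if __name__ == "__main__":
    temperature_k = 1e8  # illustrative: 100 million kelvin
    print(f"kT at {temperature_k:.0e} K: {thermal_energy_kev(temperature_k):.1f} keV")
    print(f"Mean kinetic energy: {mean_kinetic_energy_kev(temperature_k):.1f} keV")
```

At 100 million kelvin the typical particle energy is on the order of 10 keV, which gives a sense of how energetic the nuclei have to be before they collide hard enough to fuse.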

Fusion has been achieved on earth, but in a controlled manner only in very small amounts and for very short periods. The one large-scale exception is the hydrogen bomb, an uncontrolled fusion reaction (triggered by an atomic bomb) that releases a large amount of energy in a few millionths of a second. As the French physicist and Nobel laureate Pierre-Gilles de Gennes once said: “We say that we will put the sun in a box. The idea is pretty. The problem is, we don’t know how to make the box.”

The pros and cons of fusion energy can be summarized as follows:

Pros:

– virtually limitless fuel availability at low cost

– no chain reaction, as in nuclear fission, and so it is easy to stop the energy release

– fusion produces no greenhouse gases and little nuclear waste compared to nuclear fission (the radioactive waste from fusion is from neutron activation of elements in its containment environment)

Cons:

– still unproven, at any scale, as a controlled reaction that can release more energy than is required to initiate the fusion (‘ignition’)

– requires extremely high temperatures that are difficult to contain

– many serious materials problems arising from extreme neutron bombardment

– commercial power plants, if achievable, would be large and expensive to build

– full-scale power production is not expected before 2050 at the earliest

Where do I come out on all this? I am not trained as a fusion physicist (just as a low-temperature solid-state physicist) and so lack a close involvement with the efforts of so many for so long to achieve controlled nuclear fusion, and the enthusiasm and positive expectations that inevitably result. Nevertheless, I support the long-term effort to see if ignition can be achieved (some scientists believe ITER is that critical point) and if the many engineering problems associated with commercial application of fusion can be successfully addressed. In my opinion the potential payoff is too big and important for the world to ignore. In fact, I was once asked for my advice on whether the U.S. Government should support fusion R&D by a member of the DOE transition team for President-elect Carter, and my answer hasn’t changed.